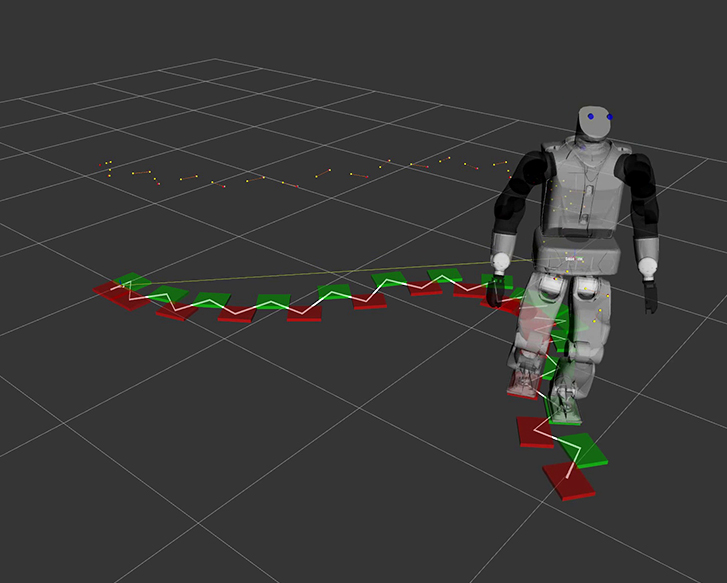

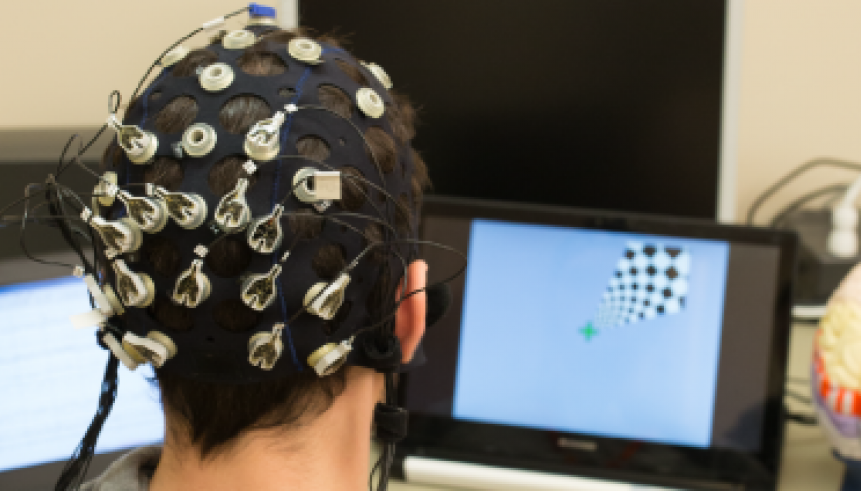

Yukon Labs represents a fundamental shift in how we approach humanoid robotics development. Our advanced neural framework enables faster learning, more natural movement, and greater adaptability in complex environments.

Built from the ground up with human-centered design, Yukon Labs combines the latest advances in machine learning with specialized optimizations for real-time control systems, sensor fusion, and human-like locomotion.